NVIDIA MGX and GH200

Total Solutions Engineered by AMAX

NVIDIA MGX with AMAX

Unlock the full potential of your data center with NVIDIA MGX, an open, multi-generational reference architecture for accelerated computing. Built around the new NVIDIA GH200 Grace Hopper Superchip, NVIDIA MGX ushers in a new era of supercomputing performance at scale.

Globally, data centers represent a $1 trillion industry, yet most still rely on unaccelerated CPU-only systems and basic networking. MGX supports the new NVIDIA Grace™ CPU Superchip as well as the NVIDIA GH200 Grace Hopper™ Superchip, which combines CPU and GPU in a single coherent memory model to meet the growing demands of AI and HPC.

At AMAX, our expert engineers are equipped to modernize your data center and tailor an NVIDIA MGX solution to your requirements. By combining high-speed networking, liquid cooling, and optimized connectivity and storage ratios, we design solutions for peak performance and improved ROI.

Design the data center of your dreams with NVIDIA MGX

At AMAX, we design and engineer your enterprise data center or production LLM solution the way you want with NVIDIA MGX™, an open, multi-generational reference architecture for accelerated computing and AI. With more than 100 standard configurations, and even more possible with our design frameworks, your ideal data center is within reach.

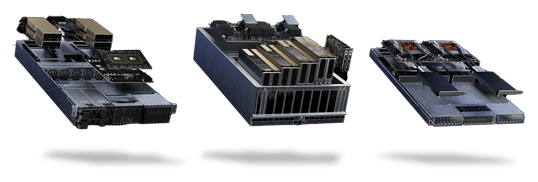

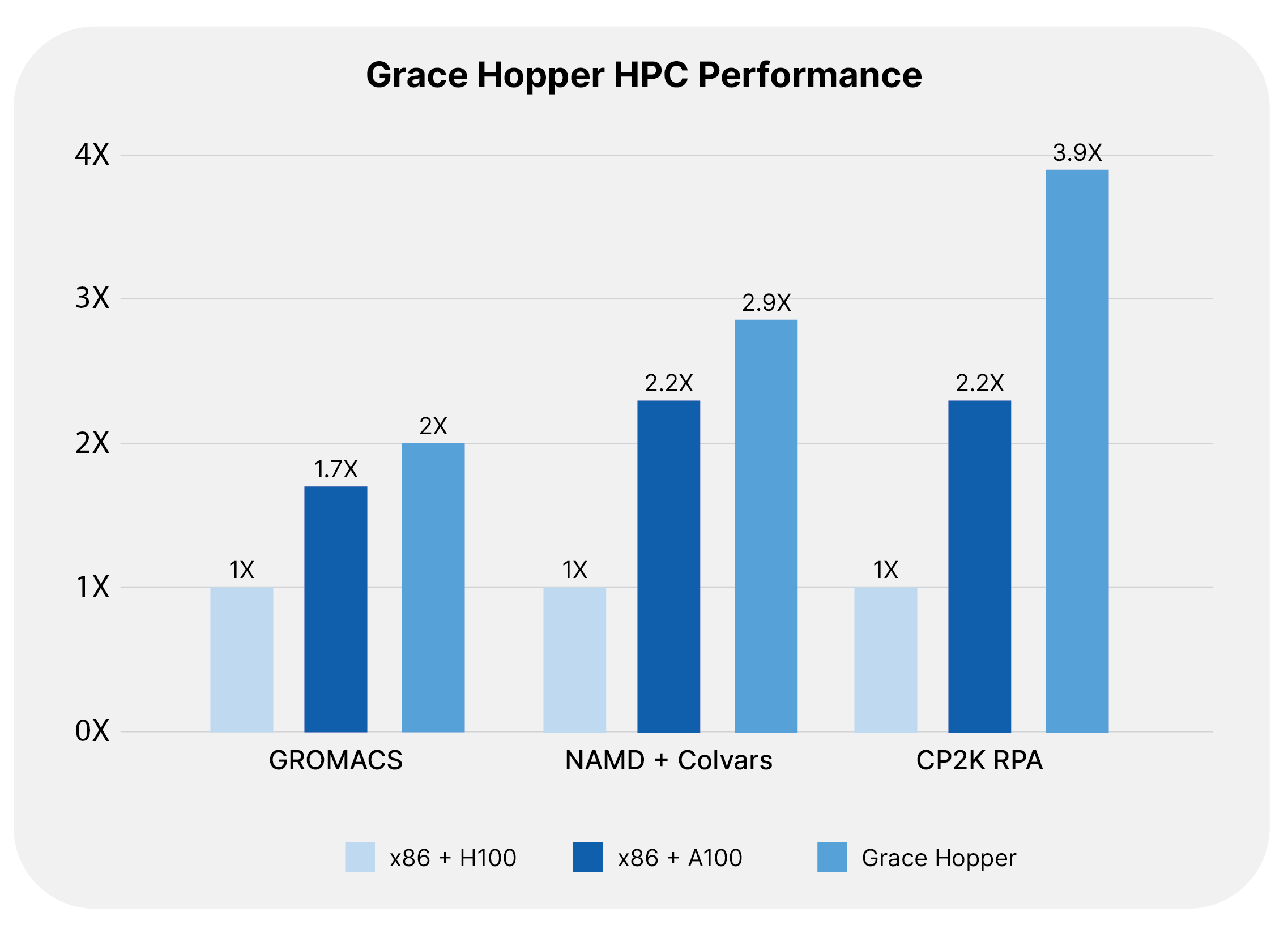

Explore what you can build with the NVIDIA GH200 Grace Hopper Superchip, which combines a revolutionary Grace CPU with an H100 GPU.

Explore MGX

Utilizing the new NVIDIA GH200 Grace Hopper™ Superchip and NVIDIA Grace™ CPU Superchip, NVIDIA MGX ushers in a new wave of custom supercomputing at scale.

With AMAX’s advanced liquid cooling technology, high-speed networking, and scalable storage, our NVIDIA MGX solutions are optimized for continuous peak performance and immediate ROI.

NVIDIA Modular MGX Systems

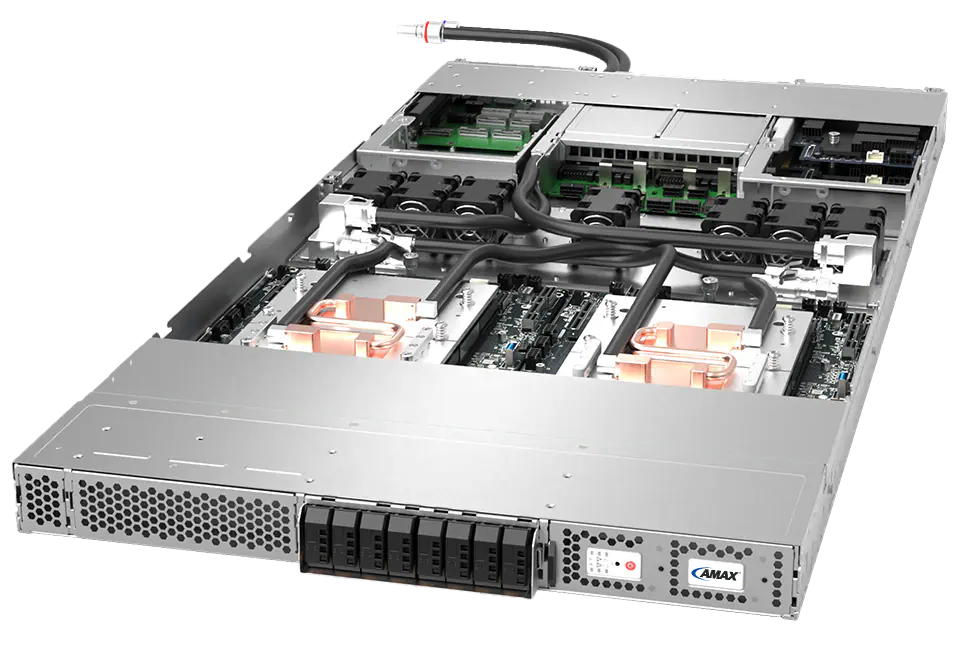

AceleMax® N-224-MG

Air-cooled 2U system with the NVIDIA Grace CPU Superchip; supports 4x double-width GPUs and 8x E1.S NVMe drives

AceleMax® X-224-MG

Air-cooled 2U dual-processor (DP) system with 4th/5th Gen Intel Xeon processors; supports 4x double-width GPUs and 8x E1.S NVMe drives

AceleMax® N-111-MGH

1U system with the NVIDIA GH200 Grace Hopper Superchip; supports air or liquid cooling

AceleMax® N-122-MGH

Liquid-cooled 1U, 2-node system with either the NVIDIA GH200 Grace Hopper Superchip or the NVIDIA Grace CPU Superchip

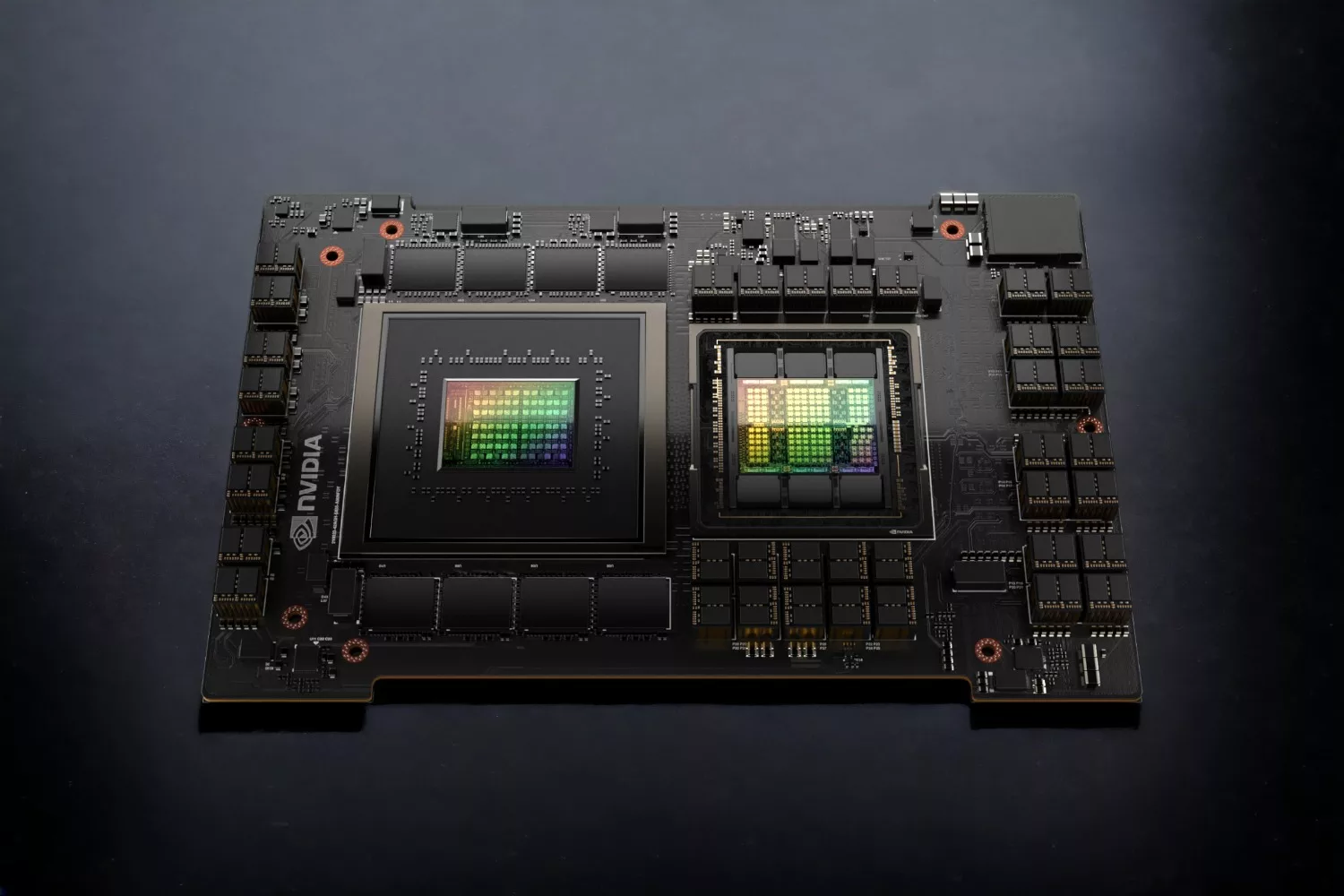

NVIDIA Grace Hopper Superchip

A first-of-its-kind CPU+GPU

Key Features:

- 72-core Grace CPU

- NVIDIA H100 Tensor Core GPU

- Up to 480GB of LPDDR5X memory with error-correction code (ECC)

- Supports 96GB of HBM3 or 144GB of HBM3e

- Up to 624GB of fast-access memory

- NVLink-C2C: 900GB/s coherent CPU-GPU interconnect
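The 624GB figure is consistent with adding the maximum CPU memory to the larger GPU memory option; this is our reading of the spec list rather than a formula stated by NVIDIA. With the 96GB HBM3 variant, the same sum would give 576GB.

```latex
\underbrace{480\,\text{GB}}_{\text{LPDDR5X (Grace CPU)}} + \underbrace{144\,\text{GB}}_{\text{HBM3e (Hopper GPU)}} = 624\,\text{GB}
```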

- 2X performance per watt compared to x86-64 platforms

- 9X faster AI training

- 30X faster AI inference

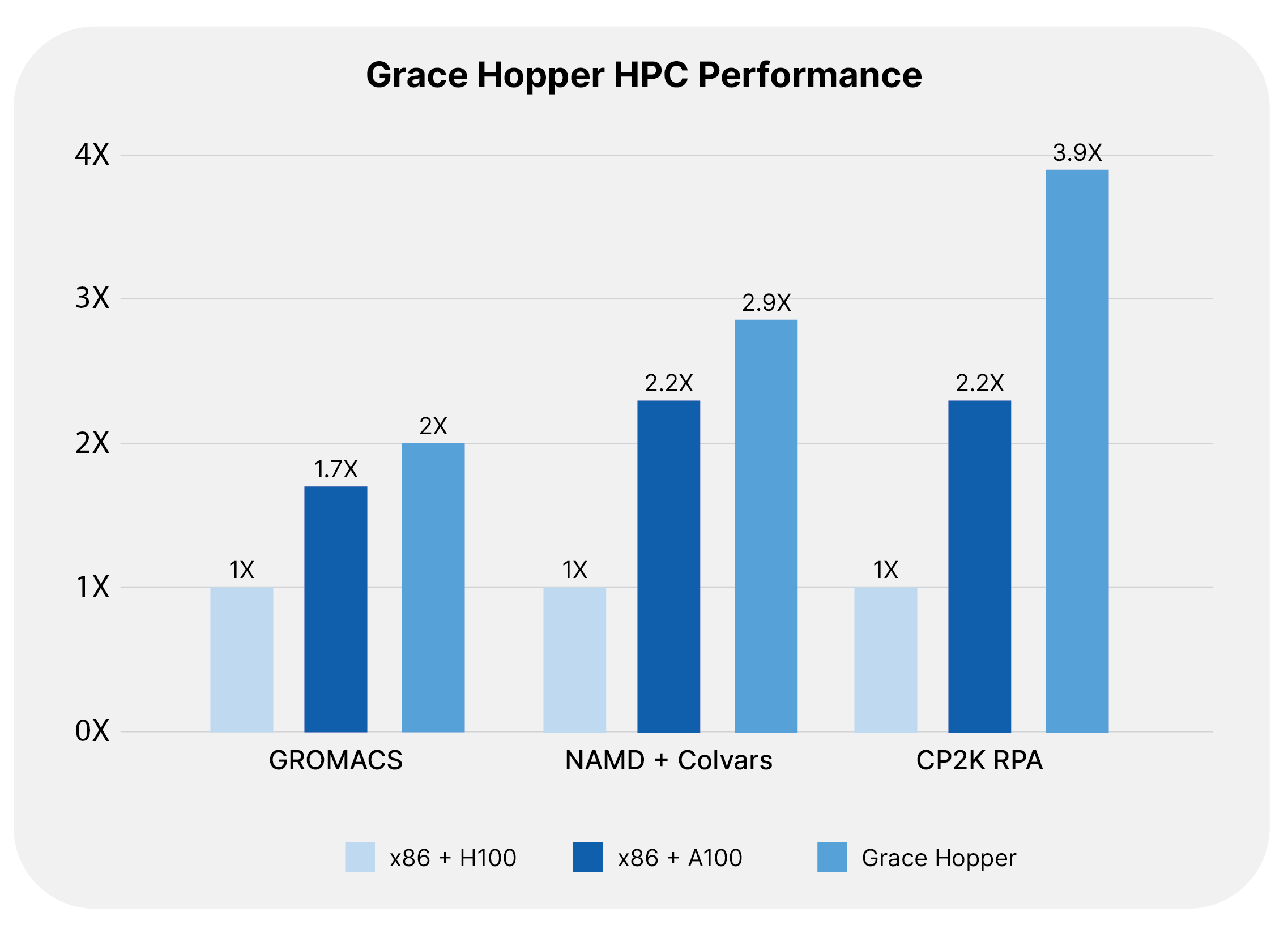

NVIDIA’s GH200 Grace Hopper Superchip is a powerhouse for AI and high-performance computing. It fuses the Hopper GPU and Grace CPU, linked by the ultra-fast NVLink-C2C interconnect at 900GB/s, 7X the bandwidth of standard PCIe Gen5. The chip supports up to 480GB of LPDDR5X CPU memory, which the GPU can access directly, giving it a large pool of fast memory. It can be deployed in standard servers and scaled up to 256 NVLink-connected GPUs sharing 144TB of memory.
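The 7X and 144TB figures can be reproduced with simple arithmetic. The sketch below is a back-of-envelope check resting on assumptions not stated on this page: a PCIe Gen5 x16 link carries about 128GB/s in total (64GB/s per direction), each superchip in the 256-GPU configuration pairs 480GB of LPDDR5X with 96GB of HBM3, and "TB" is read as 1,024GB.

```python
# Back-of-envelope check of the NVLink-C2C and 256-GPU memory figures.
# Values marked "assumption" are not stated on this page.

NVLINK_C2C_GB_S = 900        # NVLink-C2C bandwidth, from the text (GB/s)
PCIE_GEN5_X16_GB_S = 128     # assumption: PCIe Gen5 x16, ~64 GB/s per direction
LPDDR5X_GB = 480             # CPU memory per superchip, from the text
HBM3_GB = 96                 # assumption: 96GB HBM3 per GPU in the 256-GPU system
NUM_SUPERCHIPS = 256         # NVLink-connected GPUs, from the text

# Interconnect bandwidth relative to a single x16 PCIe Gen5 link.
bandwidth_ratio = NVLINK_C2C_GB_S / PCIE_GEN5_X16_GB_S

# Total CPU + GPU memory summed across all superchips.
pool_gb = NUM_SUPERCHIPS * (LPDDR5X_GB + HBM3_GB)
pool_tb = pool_gb / 1024     # assumption: "TB" here means 1,024 GB

print(f"NVLink-C2C vs PCIe Gen5 x16: {bandwidth_ratio:.1f}x")
print(f"Shared memory pool: {pool_gb:,} GB = {pool_tb:.0f} TB")
```

Under these assumptions the ratio comes out at roughly 7.0x and the pool at 147,456GB, which is 144TB in binary units.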

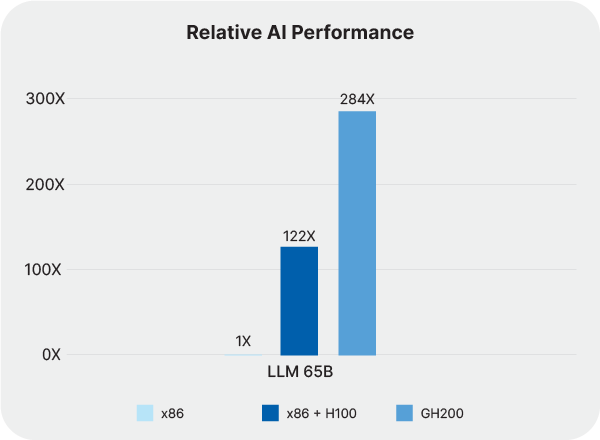

Enterprises need a versatile platform that can serve large models while making full use of their existing hardware. The GH200 Grace Hopper Superchip offers 7X more fast-access memory than conventional accelerated inference systems, along with substantially more FLOPS than CPU-based inference.

This superchip is compatible with NVIDIA MGX™ server architectures, enabling scale-out AI inference deployments with optimal TCO. It also delivers up to 284X the performance of CPU-only solutions, making it well suited to large language models, recommendation engines, vector databases, and more.

Flexible Reference Design

No two data centers are alike. Whether you operate in hyperscale, edge, or high-performance computing environments, NVIDIA MGX offers a range of CPU, GPU, DPU, and storage options to match diverse demands.

CPU

Grace & Grace Superchip

x86 CPUs

GPU

L40 Tensor Core

H100 Tensor Core

DPU / NIC

BlueField®

ConnectX®-7

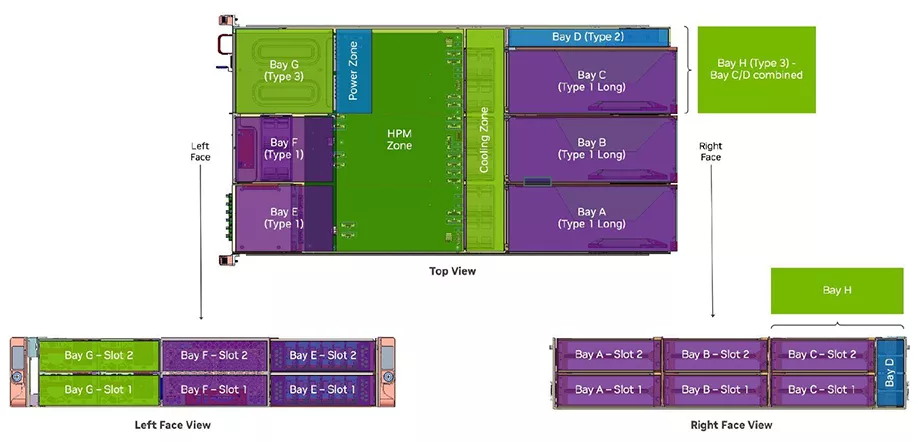

Modular Bays

Modular bays are the cornerstone of the MGX architecture, designed to accommodate both current and upcoming industry-standard form factors. The range of bay module sizes is configured to fit different chassis dimensions and component layouts, covering a wide variety of use cases.

Bay Module Width Categories

- Type 1: Accommodates a full-height PCIe add-in card (AIC) with a standard faceplate.

- Type 2: Designed for EDSFF storage or power input connectors.

- Type 3: Spans the combined width of Type 1 and Type 2, including the divider between them. It can hold two CRPS (Common Redundant Power Supply) units side by side, with extra space for a pass-through power cable.

Bay Module Length Specifications

- Short: Designed for half-length PCIe AICs; supports E1.S, E2.S, and U.2 drives.

- Long: Holds a full-length PCIe AIC, up to 12.3 inches.

Bay Module Height Options

- 1RU: For systems 1RU high.

- 2RU: For systems 2RU high.

- 4RU: For systems 4RU high.

Chassis Design

The chassis is engineered with standard locking and shelving systems compatible across bay module types. A 2RU bay module can seamlessly replace two 1RU bay modules, and likewise a Type 3 bay can replace a Type 1 and a Type 2 bay placed side by side.

Multi-Level Bays: 1RU bays are height-optimized for 1RU systems but can be stacked in taller systems. A 2RU bay provides the flexibility to place devices vertically, offering optimized use of space.

Secure Bay-to-Chassis Connection: Bay modules are securely fastened to the chassis using one of two methods—either an internal spring latch and catch system or an external camming latch handle. Both methods ensure the bay modules are sturdily anchored to the chassis.
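The interchangeability rules for bay modules can be expressed as a small data model. This is a hypothetical sketch, not part of any NVIDIA or AMAX tooling: the names `BayModule`, `can_stack_replace`, and `can_side_replace` are illustrative. It treats width and height independently, encodes the two swaps described here (one 2RU bay for two stacked 1RU bays, and one Type 3 bay for a side-by-side Type 1 and Type 2 pair), and assumes the vertical rule extends to other stacks, such as a 4RU bay in place of two 2RU bays.

```python
from dataclasses import dataclass

# Hypothetical model of MGX bay modules, for illustration only.
WIDTH_TYPES = {"Type 1", "Type 2", "Type 3"}
HEIGHTS_RU = {1, 2, 4}


@dataclass(frozen=True)
class BayModule:
    width_type: str  # "Type 1", "Type 2", or "Type 3"
    height_ru: int   # 1, 2, or 4 rack units

    def __post_init__(self):
        # Reject sizes the bay specification does not list.
        if self.width_type not in WIDTH_TYPES:
            raise ValueError(f"unknown width type: {self.width_type}")
        if self.height_ru not in HEIGHTS_RU:
            raise ValueError(f"unsupported height: {self.height_ru}RU")


def can_stack_replace(new: BayModule, old: list[BayModule]) -> bool:
    """Vertical swap: one taller bay replaces stacked bays of the same width type.

    Generalizes the stated 2RU-for-two-1RU rule (assumption beyond that case).
    """
    return (len(old) >= 2
            and all(m.width_type == new.width_type for m in old)
            and sum(m.height_ru for m in old) == new.height_ru)


def can_side_replace(new: BayModule, old: list[BayModule]) -> bool:
    """Horizontal swap: a Type 3 bay replaces a Type 1 and a Type 2 bay of equal height."""
    return (new.width_type == "Type 3"
            and sorted(m.width_type for m in old) == ["Type 1", "Type 2"]
            and all(m.height_ru == new.height_ru for m in old))


# One 2RU Type 1 bay in place of two stacked 1RU Type 1 bays.
print(can_stack_replace(BayModule("Type 1", 2), [BayModule("Type 1", 1)] * 2))
# One 1RU Type 3 bay in place of a 1RU Type 1 + 1RU Type 2 pair.
print(can_side_replace(BayModule("Type 3", 1),
                       [BayModule("Type 1", 1), BayModule("Type 2", 1)]))
```

Keeping width and height checks separate avoids a pure area comparison, which would wrongly treat a 1RU Type 3 bay as equivalent to a 2RU Type 1 bay.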

Enhanced Compatibility

Beyond the hardware, MGX also benefits from the extensive NVIDIA software stack, which gives developers and businesses the resources they need for AI, HPC, and other applications. This includes NVIDIA AI Enterprise, the software layer of the NVIDIA AI platform, which provides more than 100 frameworks, pretrained models, and development tools for enterprise-grade AI and data science.

For seamless integration into your existing infrastructure, MGX is compatible with both Open Compute Project (OCP) and Electronic Industries Alliance (EIA) server racks, enabling fast deployment in enterprise and cloud data centers.

Tailoring MGX Solutions for your workload

Our team of expert engineers specializes in designing and re-architecting data centers that can maximize the capabilities of accelerated computing and AI. We understand that each data center has its unique set of demands and constraints. Therefore, we work closely with you to custom-tailor solutions that are not only cutting-edge but also highly efficient and cost-effective.

Our in-depth knowledge of the NVIDIA MGX architecture lets us take full advantage of its features, so you get the most out of your investment. We can configure the ideal combination of accelerators, CPUs, and storage, balanced to meet your specific use-case requirements. Our approach is holistic, considering hardware, software, and existing infrastructure to make your deployment as smooth as possible. At AMAX, we provide more than just a solution; we deliver results that drive your business forward.
