Design Your Scalable NVIDIA H200 GPU Solution with AMAX

The NVIDIA H200 Tensor Core GPU: The world’s most powerful GPU supercharges AI and HPC

Engineer Your H200 Server with AMAX

At AMAX, we move quickly to make sure you have the most advanced options available when designing your HPC and AI infrastructure.

The NVIDIA H200 Tensor Core GPU supercharges generative AI and high-performance computing (HPC) with game-changing performance and memory capabilities. As the first GPU with HBM3e, H200’s faster, larger memory fuels the acceleration of generative AI and large language models (LLMs) while advancing scientific computing for HPC workloads.

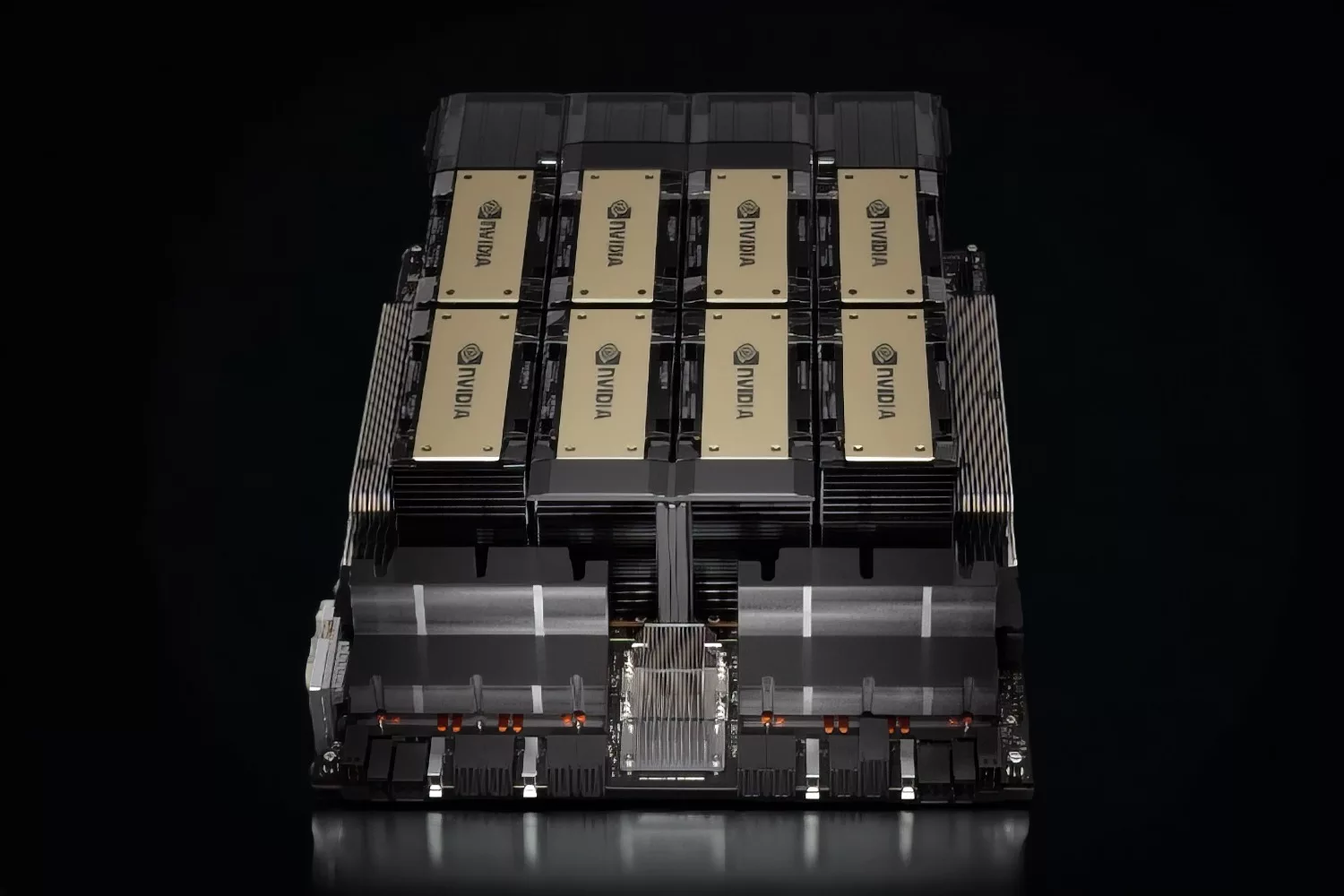

NVIDIA HGX™ H200, the world’s leading AI computing platform, features the H200 GPU for the fastest performance. An eight-way HGX H200 provides over 32 petaflops of FP8 deep learning compute and 1.1 terabytes (TB) of aggregate high-bandwidth memory for the highest performance in generative AI and HPC applications.
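
The 1.1TB aggregate figure can be sanity-checked with simple arithmetic. The sketch below assumes 141GB of HBM3e per H200, a per-GPU value taken from NVIDIA's published specifications rather than from this page; the function and constant names are illustrative only.

```python
# Per-GPU capacity is an assumption from NVIDIA's published H200 specifications,
# not a figure stated on this page.
HBM3E_PER_GPU_GB = 141
GPUS_PER_HGX_BOARD = 8  # "eight-way" HGX H200


def aggregate_memory_tb(gpus: int = GPUS_PER_HGX_BOARD,
                        per_gpu_gb: float = HBM3E_PER_GPU_GB) -> float:
    """Total high-bandwidth memory across the board, in TB (1 TB = 1000 GB)."""
    return gpus * per_gpu_gb / 1000


print(f"Aggregate HBM3e: {aggregate_memory_tb():.1f} TB")
```

Under that assumption, eight GPUs give 1,128GB, which rounds to the 1.1TB quoted above.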

NVIDIA Certified H200 GPU Systems

Supercharged High-Performance Computing with AMAX

Memory bandwidth is crucial for high-performance computing applications, as it enables faster data transfer and reduces complex processing bottlenecks. For memory-intensive HPC applications like simulations, scientific research, and artificial intelligence, H200’s higher memory bandwidth ensures that data can be accessed and manipulated efficiently, leading to up to 110X faster time to results compared to CPUs. The NVIDIA data center platform consistently delivers performance gains beyond Moore’s Law, and H200’s breakthrough AI capabilities further amplify the power of HPC+AI to accelerate time to discovery for scientists and researchers working on solving the world’s most important challenges.

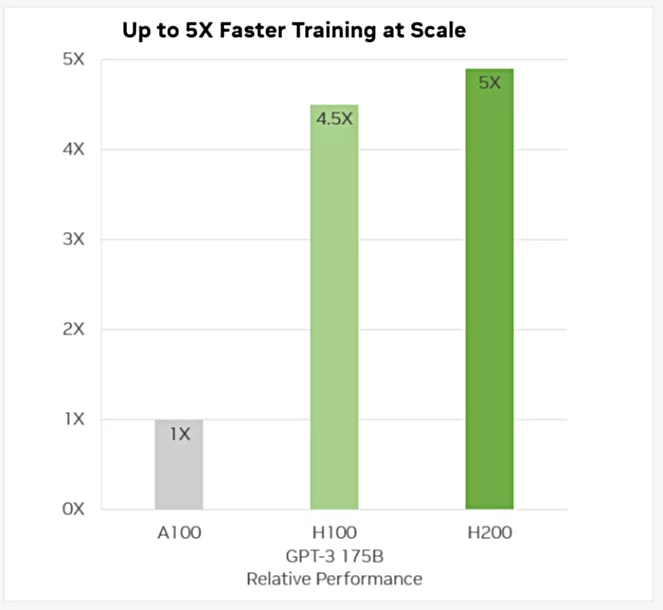

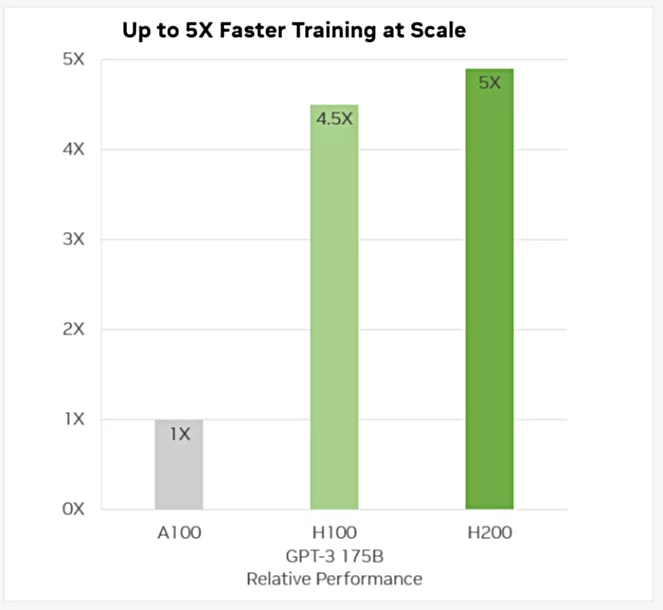

The NVIDIA H200 Accelerates Generative AI

The NVIDIA H200 Tensor Core GPU supercharges generative AI and HPC with game-changing performance and memory capabilities. As the first GPU with HBM3e, H200’s faster, larger memory fuels the acceleration of generative AI and LLMs while advancing scientific computing for HPC workloads. NVIDIA HGX™ H200, the world’s leading AI computing platform, features the H200 GPU for the fastest performance. An eight-way HGX H200 provides over 32 petaflops of FP8 deep learning compute and 1.1TB of aggregate high-bandwidth memory for the highest performance in generative AI and HPC applications.

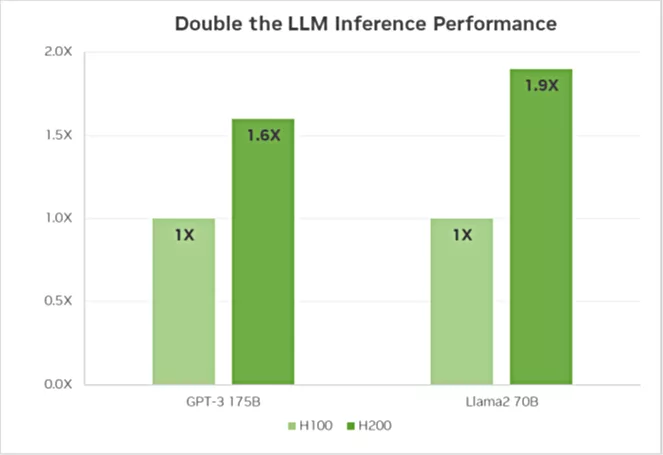

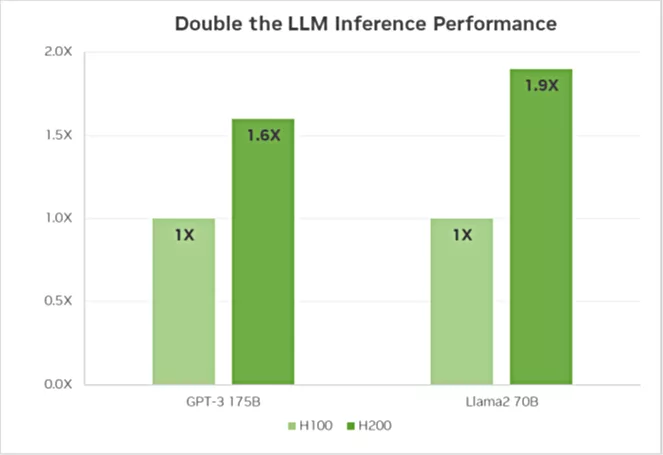

Unlock Insights With High-Performance LLM Inference

In the ever-evolving landscape of AI, businesses rely on large language models to address a diverse range of inference needs. An AI inference accelerator must deliver the highest throughput at the lowest TCO when deployed at scale for a massive user base. H200 delivers up to 2X the inference performance of H100 when handling LLMs such as Llama 2 70B.
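
To see why memory matters for LLM inference, consider a rough bound: at batch size 1, generating each token requires reading every model weight from GPU memory at least once. The sketch below estimates that lower bound for a 70-billion-parameter model stored in FP8. The bandwidth figures (4.8TB/s for H200, 3.35TB/s for H100 SXM) are assumptions taken from NVIDIA's public specifications, not from this page, and the helper names are hypothetical. It is a back-of-the-envelope model, not a reproduction of NVIDIA's benchmark.

```python
# Rough lower bound on per-token decode latency when generation is limited by
# how fast model weights can be streamed from GPU memory (batch size 1).
# All hardware figures below are assumptions from NVIDIA's public spec sheets,
# not values stated on this page.

H200_BANDWIDTH_TB_S = 4.8   # assumed H200 SXM memory bandwidth
H100_BANDWIDTH_TB_S = 3.35  # assumed H100 SXM memory bandwidth


def weight_footprint_gb(params_billion: float, bytes_per_param: float) -> float:
    """Size of the model weights in GB (1 GB = 1e9 bytes)."""
    return params_billion * bytes_per_param


def min_ms_per_token(footprint_gb: float, bandwidth_tb_s: float) -> float:
    """Time to read every weight once from memory, in milliseconds."""
    return footprint_gb / (bandwidth_tb_s * 1000) * 1000


# 70B parameters at 1 byte each (FP8) is about 70 GB of weights.
llama2_70b_fp8_gb = weight_footprint_gb(params_billion=70, bytes_per_param=1)

for name, bw in [("H100", H100_BANDWIDTH_TB_S), ("H200", H200_BANDWIDTH_TB_S)]:
    ms = min_ms_per_token(llama2_70b_fp8_gb, bw)
    print(f"{name}: at least {ms:.1f} ms per token")
```

This bound ignores the KV cache, batching, compute time, and multi-GPU communication. Bandwidth alone accounts for only part of the quoted gain; real throughput also depends on batch size, available memory capacity, and the inference software stack.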

NVIDIA NVLink and the NVIDIA H200 Tensor Core GPU

NVIDIA NVLink is the high-speed, point-to-point link that connects GPUs to each other or to CPUs. The fourth generation enables H200 to utilize 900GB/s of inter-GPU communication. NVIDIA NVSwitch provides full connectivity within a node or externally for NVLink communications. NVSwitch accelerates collective operations with multicast and NVIDIA Scalable Hierarchical Aggregation and Reduction Protocol (SHARP)™ in-network reductions. NVIDIA NVLink Switch Systems are 900GB/s fat-tree networks that connect 32–256 NVIDIA Grace Hopper™ Superchips in an all-to-all communication network.
