Enabling High-Performance NVMe-over-InfiniBand Connectivity for Advanced Storage Platforms

AMAX, together with Excelero, is delivering StorMax® all-flash and hybrid flash storage solutions featuring 200Gb/s NVMe over Fabrics on InfiniBand with NVIDIA® Mellanox® ConnectX-6 adapters. StorMax® platforms are the highest-performance, most secure, and most flexible architectures on the market, with unmatched price-performance that accelerates AI, database, big data analytics, cloud, Web 2.0, and video processing workloads.

StorMax® high-performance storage solutions are designed to pair with the latest NVIDIA A100 GPU-based compute systems and NVIDIA high-speed networking, delivering balanced compute and storage I/O with streamlined parallelism, performance, and scalability.

Fast Performance in a Compact Form Factor

StorMax® A-1110NV

Form Factor: 1U

Processor: Single Socket AMD EPYC™ 7002 or 7003 series processor

Memory: 16 DIMM slots

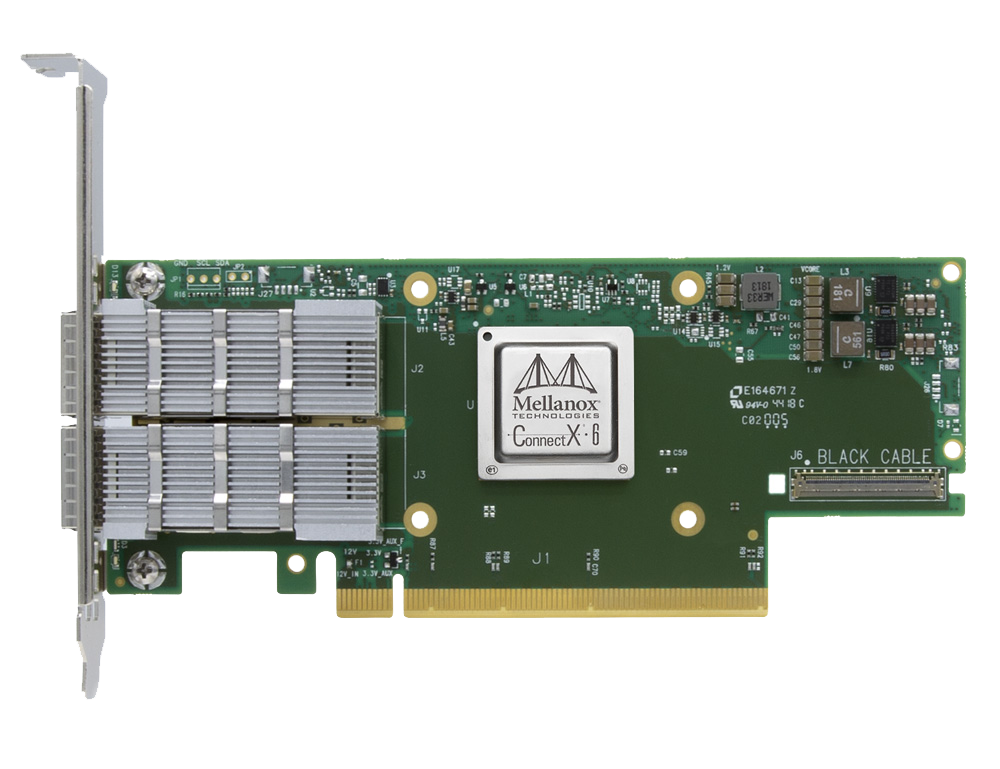

Networking: Dual-port NVIDIA Mellanox ConnectX-6 VPI adapter card (HDR 200Gb/s InfiniBand / 200GbE), 2x on-board 1GbE LAN ports

Drive Bays: 10x hot-swap 2.5″ U.2 NVMe drive bays

Reference No: Q713224


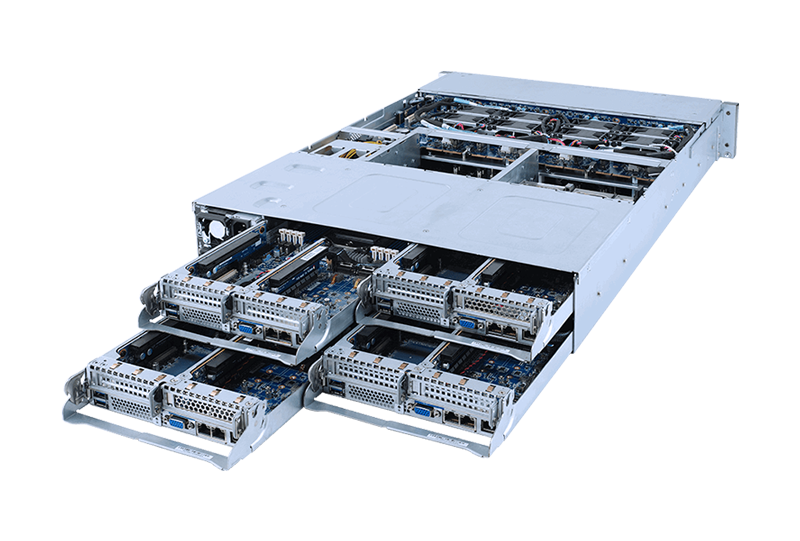

High Density, Fast Performance Storage Server

StorMax® A-2440

Form Factor: 2U (4 nodes)

Processor: Single Socket AMD EPYC™ 7002 or 7003 series processor

Memory: 8 DIMM slots per node

Networking: Dual-port NVIDIA Mellanox ConnectX-6 VPI adapter card (HDR 200Gb/s InfiniBand / 200GbE), 2x on-board 1GbE LAN ports

Drive Bays: 24x hot-swap 2.5″ U.2 NVMe drive bays (6 per node)

Reference No: Q713225


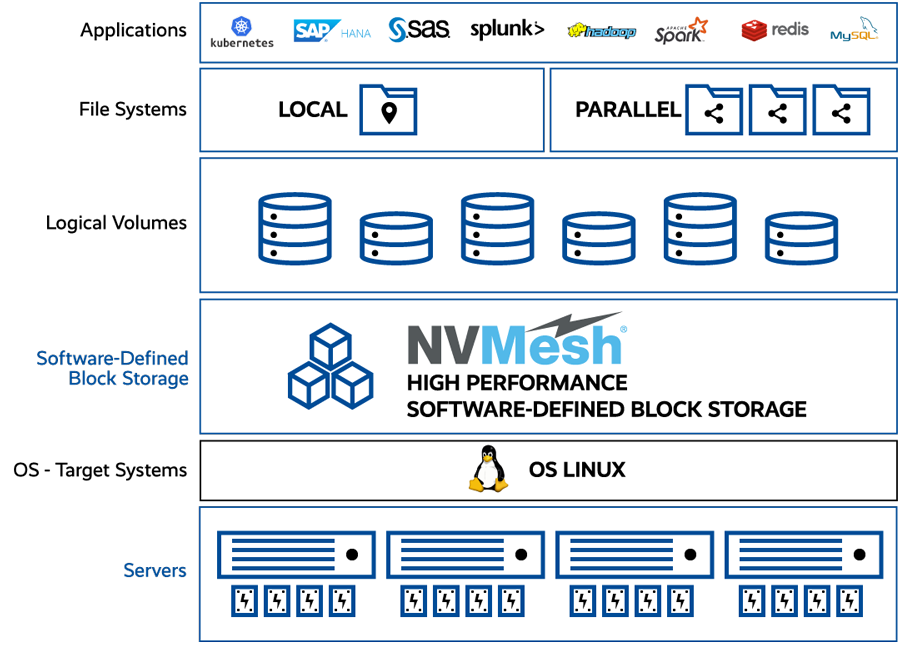

High Performance Storage Software Logical Architecture

In addition, StorMax® 200Gb/s storage systems deliver low-latency distributed block storage for web-scale applications, enabling shared NVMe across any network and supporting any local or distributed file system. These StorMax® solutions feature an intelligent management layer that abstracts the underlying hardware with CPU offload, creates logical volumes with redundancy, and provides centralized management and monitoring. Applications get the ultra-low latency, high throughput, and high IOPS of a local NVMe device with the convenience of centralized storage, while avoiding proprietary hardware lock-in and reducing overall TCO.

The Demand For More Throughput – 200G HDR InfiniBand Solution

As data analysis becomes more demanding, so does the need for higher data throughput. Only a few years ago, state-of-the-art applications for automotive construction analysis or weather simulation required 100Gb/s; today's high-performance computing, machine learning, storage, and hyperscale workloads demand even faster networks. For many of today's advanced data centers, 100Gb/s of bandwidth is no longer enough.

Whether for brain mapping or homeland security, the most demanding supercomputers and data center applications must deliver results in ever-shorter turnaround times. The race is therefore on to develop 200Gb/s technologies that meet the requirements of today's most advanced networks.

High throughput and low latency to train deep neural networks and to improve recognition and classification accuracy

The StorMax® series features Mellanox ConnectX-6 with Virtual Protocol Interconnect®, offering two ports of 200Gb/s InfiniBand and Ethernet connectivity, sub-600 nanosecond latency, and 215 million messages per second. ConnectX-6 also strengthens network security with block-level encryption offloaded to the adapter hardware, which reduces latency and saves CPU cycles.
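To put the quoted link figures in perspective, the short Python sketch below does the back-of-the-envelope arithmetic. It assumes nominal signaling rates in decimal units and ignores encoding, protocol, and NVMe-oF overhead (and any host PCIe limits), so the results are ideal upper bounds, not measured StorMax® throughput. The helper names and the 1 TB dataset size are hypothetical, chosen only for illustration.

```python
# Back-of-the-envelope arithmetic for the link figures quoted above.
# Assumption: nominal line rates in decimal units; encoding, protocol
# headers, NVMe-oF overhead and host PCIe limits are all ignored.

BITS_PER_BYTE = 8


def gbps_to_gbytes_per_s(gbps: float) -> float:
    """Convert a link rate in Gb/s to GB/s (decimal units)."""
    return gbps / BITS_PER_BYTE


def transfer_seconds(dataset_tb: float, gbps: float) -> float:
    """Ideal time to move dataset_tb terabytes over a single link."""
    dataset_gb = dataset_tb * 1000
    return dataset_gb / gbps_to_gbytes_per_s(gbps)


# One HDR port at its nominal 200 Gb/s rate.
per_port_gb_s = gbps_to_gbytes_per_s(200)

# Average spacing between messages at the quoted 215 million messages/s.
ns_per_message = 1e9 / 215e6

if __name__ == "__main__":
    print(f"200 Gb/s per port ~ {per_port_gb_s} GB/s")
    print(f"1 TB at 100 Gb/s: {transfer_seconds(1, 100):.0f} s")
    print(f"1 TB at 200 Gb/s: {transfer_seconds(1, 200):.0f} s")
    print(f"average interval at 215M msg/s: {ns_per_message:.2f} ns")
```

Under these idealized assumptions, doubling the link rate halves the transfer time for a 1 TB dataset (80 s at 100Gb/s versus 40 s at 200Gb/s), which is the essence of the case for moving from 100Gb/s to 200Gb/s.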

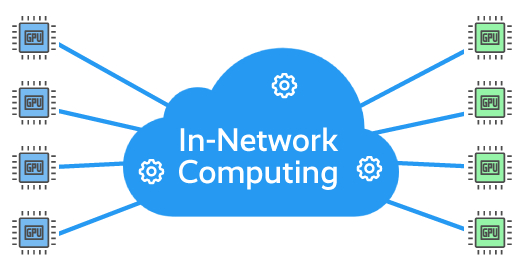

In-Network Computing and Security Offloads

ConnectX-6 adapters and Quantum HDR InfiniBand switches support the new generation of data center architecture: the data-centric architecture, in which the network becomes a distributed processor. With additional hardware accelerators, ConnectX-6 and Quantum enable In-Network Computing and In-Network Memory capabilities, offloading even more computation to the network, which saves CPU cycles and increases network efficiency.

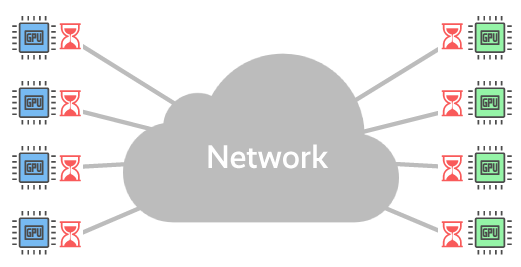

Figure: CPU-Centric (Onload) architecture creates performance bottlenecks; Data-Centric (Offload) architecture analyzes data as it moves.