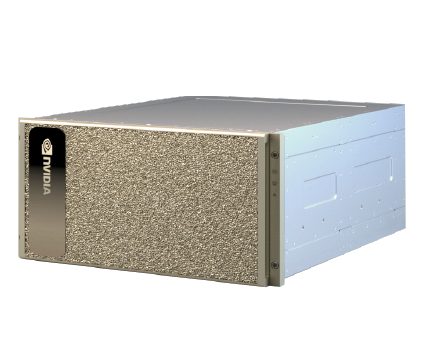

NVIDIA DGX™ A100

First AI System Built On New NVIDIA A100 GPU

- 8x NVIDIA A100 Tensor Core GPUs, which deliver unmatched acceleration

- Tensor Float 32 (TF32) for 20x higher FLOPS

- 8x NVIDIA A100 80 GB GPUs

- Available with up to 640 gigabytes (GB) of total GPU memory

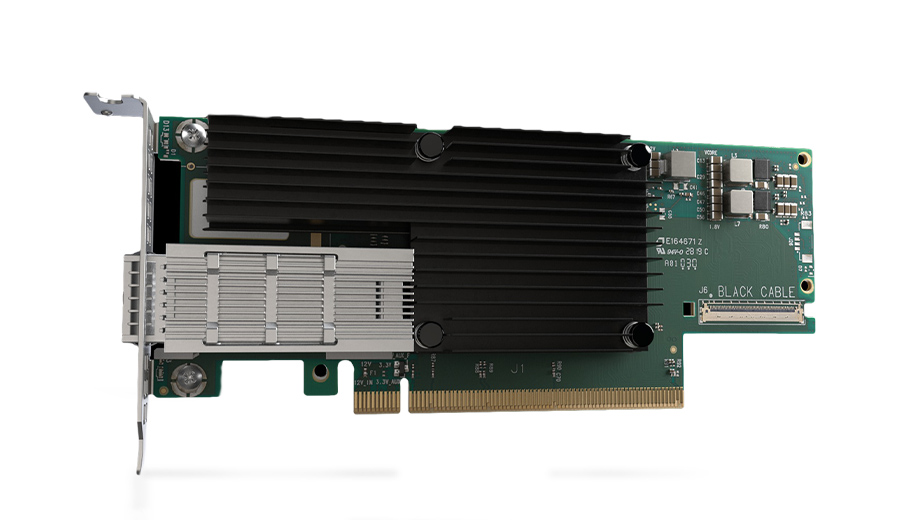

- 8x Single-Port Mellanox ConnectX-6 VPI 200 Gb/s HDR InfiniBand

The Challenge of Scaling Enterprise AI

Every business needs to transform using artificial intelligence (AI), not only to survive, but to thrive in challenging times. However, the enterprise requires a platform for AI infrastructure that improves upon traditional approaches, which historically involved slow compute architectures that were siloed by analytics, training, and inference workloads. The old approach created complexity, drove up costs, constrained speed of scale, and was not ready for modern AI. Enterprises, developers, data scientists, and researchers need a new platform that unifies all AI workloads, simplifying infrastructure and accelerating ROI.

The Universal System for All AI Workloads

NVIDIA DGX™ A100 is the universal system for all AI workloads—from analytics to training to inference. DGX A100 sets a new bar for compute density, packing 5 petaFLOPS of AI performance into a 6U form factor, replacing legacy compute infrastructure with a single, unified system. DGX A100 also offers the unprecedented ability to deliver fine-grained allocation of computing power, using the Multi-Instance GPU (MIG) capability in the NVIDIA A100 Tensor Core GPU, which enables administrators to assign resources that are right-sized for specific workloads.

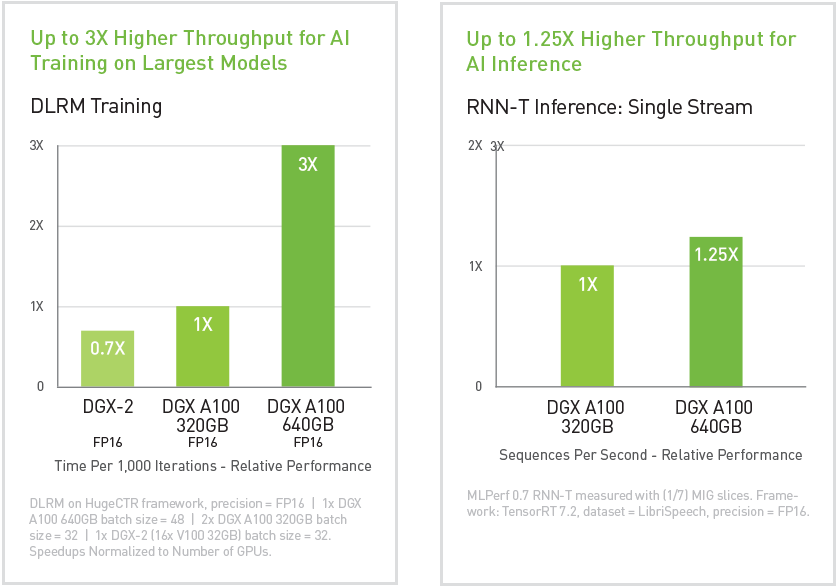

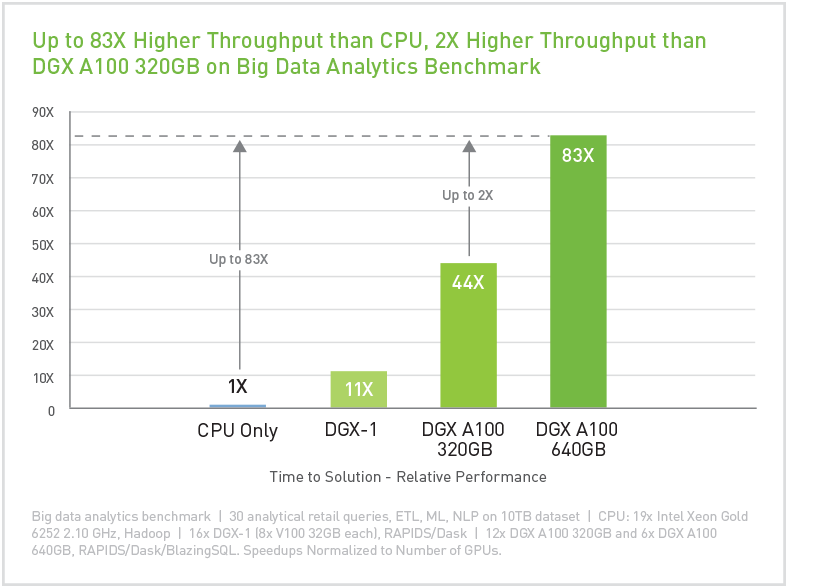

Available with up to 640 gigabytes (GB) of total GPU memory, which increases performance in large-scale training jobs up to 3X and doubles the size of MIG instances, DGX A100 can tackle the largest and most complex jobs, along with the simplest and smallest. Running the DGX software stack with optimized software from NGC, DGX A100 combines dense compute power with complete workload flexibility, making it an ideal choice for both single-node deployments and large-scale Slurm and Kubernetes clusters deployed with NVIDIA DeepOps.

IT Deployment Services Available

Do you want to shorten time to insights and accelerate ROI for AI? Let our professional IT team expedite, deploy and integrate the world’s first 5 petaFLOPS AI system, NVIDIA® DGX™ A100, into your infrastructure seamlessly without disruption and with 24/7 support.

Get the results and outcomes you need:

- Site survey analysis, readiness, pre-test and staging

- Access dedicated engineers, solution architects, and support technicians

- Deployment planning, scheduling and project management

- Shipping, logistics management and inventory staging

- Onsite installation, onsite or remote software configuration

- Post-deployment check-up, support, ticketing and maintenance

- Lifecycle management including design, upgrades, recovery, repair, and disposal

- Rack-and-stack, integration services, and multi-site deployment

- Custom-tailored break-fix and managed service contracts

Learn more about our IT deployment services.

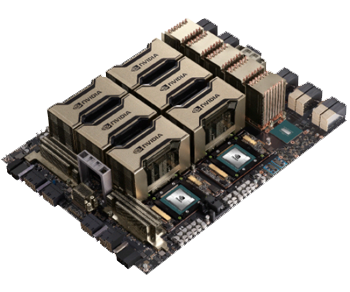

NVIDIA A100 Tensor Core GPU

- 8X NVIDIA A100 GPUS WITH UP TO 640 GB TOTAL GPU MEMORY

  12 NVLinks/GPU, 600 GB/s GPU-to-GPU Bi-directional Bandwidth

- 6X NVIDIA NVSWITCHES

  4.8 TB/s Bi-directional Bandwidth, 2X More than Previous Generation NVSwitch

- 10X MELLANOX CONNECTX-6 200 Gb/s NETWORK INTERFACE

  500 GB/s Peak Bi-directional Bandwidth

- DUAL 64-CORE AMD CPUs AND 2 TB SYSTEM MEMORY

  3.2X More Cores to Power the Most Intensive AI Jobs

- 30 TB GEN4 NVME SSD

  50 GB/s Peak Bandwidth, 2X Faster than Gen3 NVME SSDs

Technology Inside NVIDIA DGX A100

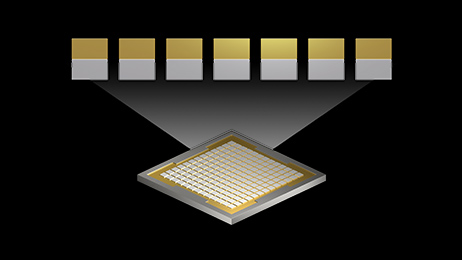

Multi-Instance GPU (MIG)

The eight A100 GPUs in DGX A100 can be configured into as many as 56 GPU instances, each fully isolated with its own high-bandwidth memory, cache, and compute cores.
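The 56-instance figure can be checked with simple arithmetic. The sketch below assumes a limit of seven MIG instances per A100 GPU, which this datasheet does not state directly (it follows from 56 instances across eight GPUs). The function names are hypothetical and chosen only for illustration.

```python
# Sketch: how the "56 GPU instances" figure follows from a per-GPU MIG limit.
GPUS_PER_SYSTEM = 8            # from the datasheet
MAX_MIG_INSTANCES_PER_GPU = 7  # assumption: per-GPU limit implied by 56 / 8


def max_mig_instances(gpus=GPUS_PER_SYSTEM, per_gpu=MAX_MIG_INSTANCES_PER_GPU):
    """Upper bound on isolated GPU instances across the whole system."""
    return gpus * per_gpu


def mig_layout(gpus=GPUS_PER_SYSTEM, per_gpu=MAX_MIG_INSTANCES_PER_GPU):
    """Enumerate (gpu_index, instance_index) pairs for a fully partitioned system."""
    return [(g, i) for g in range(gpus) for i in range(per_gpu)]


if __name__ == "__main__":
    print(max_mig_instances())  # total instances across the system
    print(mig_layout()[:3])     # first few (gpu, instance) slots
```

In practice an administrator would rarely split every GPU into its smallest slices; the layout above is only the upper bound that the datasheet quotes.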

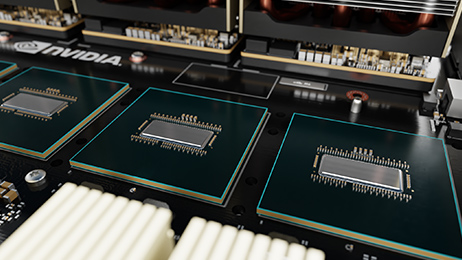

NVLink & NVSwitch

The third generation of NVIDIA® NVLink™ doubles the GPU-to-GPU direct bandwidth to 600 GB/s, almost 10X higher than PCIe Gen4. DGX A100 also includes next-generation NVIDIA NVSwitch™, which is 2X faster than the previous generation.
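As a rough check of the NVLink figures, the sketch below multiplies the 12 links per GPU (from this datasheet) by an assumed 50 GB/s of bidirectional bandwidth per third-generation link, and compares the result with an assumed 64 GB/s bidirectional figure for a PCIe Gen4 x16 slot. Both per-link numbers are assumptions that do not appear in this document.

```python
# Sketch: NVLink aggregate bandwidth per GPU and its ratio to PCIe Gen4 x16.
NVLINKS_PER_GPU = 12        # from the datasheet
GB_S_PER_NVLINK = 50        # assumption: bidirectional GB/s per third-gen link
PCIE_GEN4_X16_GB_S = 64     # assumption: approximate bidirectional GB/s, x16 slot


def nvlink_bandwidth_gb_s(links=NVLINKS_PER_GPU, per_link=GB_S_PER_NVLINK):
    """Aggregate bidirectional GPU-to-GPU bandwidth in GB/s."""
    return links * per_link


def speedup_over_pcie(pcie_gb_s=PCIE_GEN4_X16_GB_S):
    """Ratio of NVLink aggregate bandwidth to the assumed PCIe figure."""
    return nvlink_bandwidth_gb_s() / pcie_gb_s


if __name__ == "__main__":
    print(nvlink_bandwidth_gb_s(), "GB/s per GPU")
    print(f"{speedup_over_pcie():.1f}x PCIe Gen4 x16")
```

Under these assumptions the ratio comes out a little above 9, which is consistent with the "almost 10X" wording.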

InfiniBand

New Mellanox ConnectX-6 VPI HDR InfiniBand/Ethernet adapters run at 200 gigabits per second (Gb/s) to create a high-speed fabric for large-scale AI workloads.
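The 500 GB/s peak bidirectional figure quoted for the network interfaces can be reproduced from the per-adapter rate. The sketch below assumes ten 200 Gb/s interfaces all running at line rate in both directions, which is a theoretical peak rather than a measured result. The function name is hypothetical.

```python
# Sketch: converting per-adapter line rate (gigabits) to aggregate gigabytes.
ADAPTERS = 10               # from the datasheet (10X ConnectX-6 interfaces)
GBIT_S_PER_ADAPTER = 200    # from the datasheet
BITS_PER_BYTE = 8


def peak_network_bandwidth_gb_s(adapters=ADAPTERS, gbit_s=GBIT_S_PER_ADAPTER):
    """Return (per-direction, bidirectional) peak bandwidth in GB/s."""
    one_way = adapters * gbit_s / BITS_PER_BYTE
    return one_way, 2 * one_way


if __name__ == "__main__":
    one_way, both_ways = peak_network_bandwidth_gb_s()
    print(one_way, "GB/s per direction;", both_ways, "GB/s bidirectional")
```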

Optimized Software Stack

Integrated DGX software stack, including an AI-tuned base operating system, all necessary system software, GPU-accelerated applications, pre-trained models, and more.

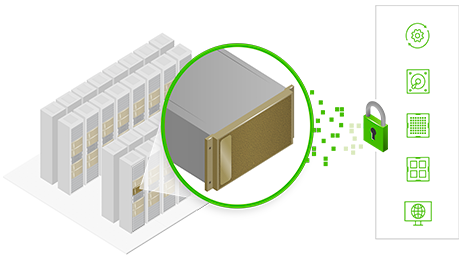

Built-in Security

Most robust security for AI deployments, with a multi-layered approach stretching across the baseboard management controller (BMC), CPU board, GPU board, self-encrypted drives, and secure boot.

Essential Building Block of the AI Data Center

The Universal System for Every AI Workload

One universal building block to run any workload anytime, from analytics to AI training to inference.

Integrated Access to AI Expertise

Fast-track AI transformation with NVIDIA DGXperts to help maximize the value of DGX investment.

Game-changing Performance for Innovators

Provides unprecedented acceleration with eight A100 GPUs and is fully optimized for NVIDIA CUDA-X™ software and the end-to-end NVIDIA data center solution stack.

Unmatched Data Center Scalability

Build leadership-class AI infrastructure that scales to keep ahead of demand.

Unprecedented Performance

GPUs

NVIDIA DGX A100 640GB:

- 8x NVIDIA A100 80 GB GPUs

NVIDIA DGX A100 320GB:

- 8x NVIDIA A100 40 GB GPUs

GPU Memory

NVIDIA DGX A100 640GB:

- 640 GB total

NVIDIA DGX A100 320GB:

- 320 GB total

Performance

- 5 petaFLOPS AI

- 10 petaOPS INT8
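The system-level performance figures appear to be eight times the per-GPU peaks. The sketch below assumes per-GPU Tensor Core peaks of 624 TFLOPS (FP16) and 1,248 TOPS (INT8), both with structured sparsity. Those per-GPU numbers come from NVIDIA's A100 specifications, not from this datasheet, so treat them as assumptions.

```python
# Sketch: system peak performance as a multiple of assumed per-GPU peaks.
GPUS_PER_SYSTEM = 8                 # from the datasheet
FP16_SPARSE_TFLOPS_PER_GPU = 624    # assumption: A100 peak with structured sparsity
INT8_SPARSE_TOPS_PER_GPU = 1248     # assumption: A100 peak with structured sparsity


def system_peak_peta(per_gpu_tera, gpus=GPUS_PER_SYSTEM):
    """Scale a per-GPU tera-scale peak to a system-level peta-scale peak."""
    return gpus * per_gpu_tera / 1000


if __name__ == "__main__":
    print(system_peak_peta(FP16_SPARSE_TFLOPS_PER_GPU), "petaFLOPS AI")
    print(system_peak_peta(INT8_SPARSE_TOPS_PER_GPU), "petaOPS INT8")
```

Under these assumptions the totals are 4.992 petaFLOPS and 9.984 petaOPS, which round to the 5 and 10 quoted above.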

CPU

Dual AMD Rome 7742, 128 cores total, 2.25 GHz (base), 3.4 GHz (max boost)

NVIDIA NVSwitches

6

System Power Usage

6.5kW max

System Memory

NVIDIA DGX A100 640GB:

- 2TB

NVIDIA DGX A100 320GB:

- 1TB

Networking

NVIDIA DGX A100 640GB:

- 8x Single-Port Mellanox ConnectX-6 VPI, 200 Gb/s HDR InfiniBand

- 2x Dual-Port Mellanox ConnectX-6 VPI, 10/25/50/100/200 Gb/s Ethernet

NVIDIA DGX A100 320GB:

- 8x Single-Port Mellanox ConnectX-6 VPI, 200 Gb/s HDR InfiniBand

- 1x Dual-Port Mellanox ConnectX-6 VPI, 10/25/50/100/200 Gb/s Ethernet

Storage

NVIDIA DGX A100 640GB:

- OS: 2x 1.92 TB M.2 NVMe drives

- Internal Storage: 30 TB (8x 3.84 TB) U.2 NVMe drives

NVIDIA DGX A100 320GB:

- OS: 2x 1.92 TB M.2 NVMe drives

- Internal Storage: 15 TB (4x 3.84 TB) U.2 NVMe drives

Software

Ubuntu Linux OS

Also supports: Red Hat Enterprise Linux, CentOS

System Weight

271.5 lbs (123.16 kg) max

System Dimensions

- Height: 10.4 in (264.0 mm)

- Width: 19.0 in (482.3 mm) max

- Length: 35.3 in (897.1 mm) max

Packaged System Weight

359.7 lbs (163.16 kg) max
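The two configurations in the specification differ mainly in per-GPU memory and in the number of internal data drives. The sketch below encodes only those values from the specification and derives the totals. The class and field names are hypothetical, and the storage total is raw capacity before any RAID or filesystem overhead.

```python
# Sketch: deriving the quoted totals for the two DGX A100 configurations.
from dataclasses import dataclass


@dataclass(frozen=True)
class DgxA100Config:
    name: str
    gpu_count: int
    gpu_memory_gb: int
    data_drive_count: int
    data_drive_tb: float

    @property
    def total_gpu_memory_gb(self) -> int:
        """Total GPU memory across all GPUs, in GB."""
        return self.gpu_count * self.gpu_memory_gb

    @property
    def raw_storage_tb(self) -> float:
        """Raw capacity of the internal U.2 data drives, in TB."""
        return round(self.data_drive_count * self.data_drive_tb, 2)


CONFIGS = [
    DgxA100Config("DGX A100 640GB", 8, 80, 8, 3.84),
    DgxA100Config("DGX A100 320GB", 8, 40, 4, 3.84),
]

if __name__ == "__main__":
    for cfg in CONFIGS:
        print(cfg.name, cfg.total_gpu_memory_gb, "GB GPU memory,",
              cfg.raw_storage_tb, "TB raw NVMe")
```

The raw drive totals (30.72 TB and 15.36 TB) are slightly above the rounded 30 TB and 15 TB figures listed under Storage.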

DGX Ready Colocation Partner

Don’t let infrastructure delay your AI ROI.

Our colocation partner, Colovore, makes it easy to deploy, optimize, and scale your AI applications in the data center immediately.

- Instant access to GPU-ready data center facility certified by NVIDIA

- Scale easily with no power, cooling or distance limitations

- 24/7 IT support from an experienced onsite team of IT infrastructure and networking experts