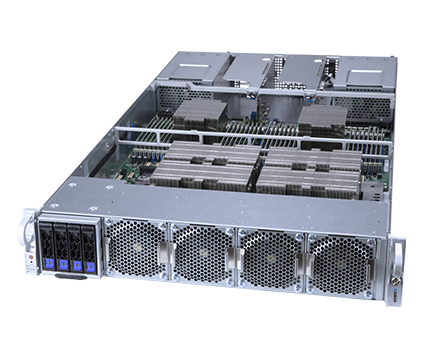

AceleMax DGS-224AS

2U Dual AMD EPYC Processor 4x NVIDIA A100 SXM4 GPU Server

- High density 2U System with four NVIDIA HGX A100 SXM4 GPUs

- High-bandwidth GPU peer-to-peer communication via NVIDIA NVLink

- Lower latency with direct-attached GPUs and next-generation PCIe 4.0 support

- Four NICs for GPUDirect RDMA and four NVMe drives for GPUDirect Storage

- Supports two AMD EPYC™ 7002 or 7003 series processors

- Designed for VDI, machine intelligence, deep learning, machine learning, artificial intelligence, neural networks, advanced rendering and compute

Reference # 4B28212

The AceleMax DGS-224AS is a high-density 2U system with four NVIDIA® HGX™ A100 40GB or 80GB SXM4 GPUs. It supports high-bandwidth GPU peer-to-peer communication via NVIDIA NVLink, direct-attached GPUs over next-generation PCIe 4.0, dual AMD EPYC 7002 or 7003 series processors, four NICs for GPUDirect RDMA, and four NVMe drives for GPUDirect Storage. Together these deliver large-scale parallel computing power that helps customers accelerate their digital transformation.

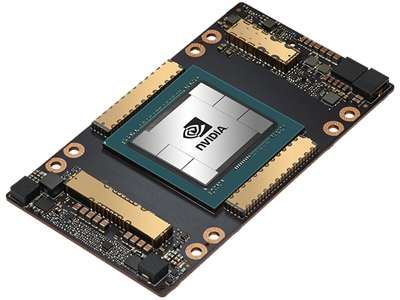

NVIDIA A100 SXM for HGX

The NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration and flexibility to power the world's highest-performing elastic data centers for AI, data analytics and HPC applications. As the engine of the NVIDIA data center platform, the A100 GPU provides up to 20X higher performance and 2.5X the AI performance of V100 GPUs. It can efficiently scale up to thousands of GPUs or be partitioned into seven isolated GPU instances with the new Multi-Instance GPU (MIG) capability to accelerate workloads of all sizes.

The NVIDIA A100 GPU features third-generation Tensor Core technology that supports a broad range of math precisions, providing a unified workload accelerator for data analytics, AI training, AI inference, and HPC. It also adds Multi-Instance GPU, which improves utilization through right-sized GPU partitions with up to seven simultaneous instances per GPU; sparsity acceleration, which exploits sparsity in AI models for up to 2X AI performance; and third-generation NVLink and NVSwitch, which provide 2X more bandwidth than the V100 GPU for efficient multi-GPU scaling. Accelerating both scale-up and scale-out workloads on one platform enables elastic data centers that can dynamically adjust to shifting application workload demands. This simultaneously boosts throughput and drives down the cost of data centers.
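The sparsity acceleration mentioned above works, on the A100, with a 2:4 fine-grained structured pattern: at most two nonzero values in every group of four weights. The following is a minimal sketch in plain Python of pruning a weight row to that pattern. The function name `prune_2_of_4` and the magnitude-based choice of which values to keep are assumptions made for illustration, not NVIDIA's tooling.

```python
def prune_2_of_4(weights):
    """Zero the two smallest-magnitude values in each group of four.

    Hypothetical helper for illustration only; keeping the two
    largest-magnitude entries per group is an assumed selection rule.
    """
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(v if i in keep else 0.0 for i, v in enumerate(group))
    return pruned


row = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.01]
sparse_row = prune_2_of_4(row)
print(sparse_row)
```

Every group of four in `sparse_row` holds exactly two zeros, which is the regular structure the hardware relies on to skip work.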

Combined with the NVIDIA software stack, the A100 GPU accelerates all major deep learning and data analytics frameworks and over 700 HPC applications. NVIDIA NGC, a hub for GPU-optimized software containers for AI and HPC, simplifies application deployments so researchers and developers can focus on building their solutions.

Applications:

AI, HPC, VDI, machine intelligence, deep learning, machine learning, neural networks, advanced rendering and compute.

System

2U Rackmount

Processors

Dual AMD EPYC™ 7002 or 7003 Processors

GPU

NVIDIA HGX A100 4x GPU 40GB

Memory

32 DIMM slots, up to 8TB DDR4 memory, 3200 MHz DIMMs

Drives

4x Hot-swap hybrid SATA/NVMe M.2 and SAS via HBA

I/O

4x PCIe 4.0 x16 LP, 1x PCIe 4.0 x8 LP

Cooling Fans

4x Removable heavy duty fans

Power Supplies

2200W Titanium Level; upgrade option: 3000W Redundant PWS

Processor

Dual AMD EPYC™ 7002 or 7003 series processors, 7nm, Socket SP3, with up to 64 cores, 128 threads, and 256MB L3 cache per processor, TDP up to 280W

Memory

- 32 x DDR4 DIMM slots

- 8-Channel memory architecture

- Up to 8TB RDIMM/LRDIMM DDR4-3200 memory
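The memory figures above imply a DIMM layout and a theoretical peak bandwidth that can be worked out with simple arithmetic. The sketch below assumes a standard 64-bit (8-byte) DDR4 channel, which is not stated in this datasheet, and the bandwidth it prints is a theoretical peak, not a measured value.

```python
# Values taken from the memory specification above.
DIMM_SLOTS = 32
SOCKETS = 2
CHANNELS_PER_SOCKET = 8
MAX_MEMORY_GB = 8 * 1024          # 8TB expressed in GB
TRANSFER_RATE_MT_S = 3200         # DDR4-3200

# Assumption (not from the datasheet): 64-bit data bus per DDR4 channel.
BYTES_PER_TRANSFER = 8

dimms_per_channel = DIMM_SLOTS // (SOCKETS * CHANNELS_PER_SOCKET)
gb_per_dimm = MAX_MEMORY_GB // DIMM_SLOTS
peak_gb_s_per_socket = (
    TRANSFER_RATE_MT_S * BYTES_PER_TRANSFER * CHANNELS_PER_SOCKET / 1000
)

print(f"DIMMs per channel: {dimms_per_channel}")
print(f"Largest DIMM needed for 8TB: {gb_per_dimm} GB")
print(f"Theoretical peak bandwidth per socket: {peak_gb_s_per_socket} GB/s")
```

Reaching the full 8TB therefore requires 256GB modules in all 32 slots, at two DIMMs per channel.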

Graphics Processing Unit (GPU):

- Supports 4 NVIDIA A100 40GB or 80GB SXM4 GPUs

- Supports PCIe Gen4 (64 GB/sec) and third-generation NVIDIA® NVLink® (600 GB/sec) interconnect interfaces

- Up to 7 Multi-Instance GPU (MIG) instances

- Delivers 100% performance for top applications

- With four A100 SXM GPUs in a 2U chassis: up to 27,648 FP32 CUDA cores, 13,824 FP64 CUDA cores, 1,728 Tensor Cores, 38.8 TF peak FP64 double-precision performance, 78 TF peak FP64 Tensor Core double-precision performance, 78 TF peak FP32 single-precision performance, 1,248 TF peak bfloat16 Tensor Core performance, 1,248 TF peak FP16 Tensor Core half-precision performance, 4,992 TOPS peak INT8 Tensor Core inference performance (with sparsity), and 160GB of GPU memory (4 x 40GB)

- On-board Aspeed AST2600 graphics controller
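The aggregate GPU figures in the list above are per-GPU values multiplied by four. The sketch below reproduces that arithmetic. The per-GPU numbers are taken from NVIDIA's published A100 40GB SXM specifications, not from this datasheet, and should be treated as assumptions; the INT8 entry uses the with-sparsity figure, since that is the one that matches the 4,992 TOPS total.

```python
GPUS_PER_SYSTEM = 4
MIG_INSTANCES_PER_GPU = 7  # from the MIG bullet above

# Assumed per-GPU values (NVIDIA A100 40GB SXM public specifications).
PER_GPU = {
    "fp32_cuda_cores": 6912,
    "fp64_cuda_cores": 3456,
    "tensor_cores": 432,
    "fp64_tflops": 9.7,
    "fp64_tensor_tflops": 19.5,
    "fp32_tflops": 19.5,
    "bf16_tensor_tflops": 312,
    "fp16_tensor_tflops": 312,
    "int8_tensor_tops_sparse": 1248,  # 624 dense, 1248 with sparsity
    "memory_gb": 40,
}

# Scale every per-GPU figure to the four-GPU system; round to one
# decimal place to avoid floating-point noise in the TFLOPS values.
aggregate = {name: round(value * GPUS_PER_SYSTEM, 1)
             for name, value in PER_GPU.items()}
total_mig_instances = GPUS_PER_SYSTEM * MIG_INSTANCES_PER_GPU

for name, value in aggregate.items():
    print(f"{name}: {value}")
print(f"total_mig_instances: {total_mig_instances}")
```

With the 80GB variant of the A100, the same multiplication would give 320GB of GPU memory while the compute figures stay unchanged.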

Expansion Slots

- 4x PCI-E Gen 4 x16 (LP) slots

- 1x PCI-E Gen 4 x8 (LP) slot

Storage

- 4x hot-swap 2.5″ drive bays (SATA/NVMe Hybrid or SAS with optional HBA)

Server Management

- Support for Intelligent Platform Management Interface v.2.0

- IPMI 2.0 with virtual media over LAN and KVM-over-LAN support

Network Controller

- Dual RJ45 10GbE-aggregate host LAN

- 1x GbE management LAN

Power Supply

2000W Titanium level Redundant Power Supplies with PMBus

System Dimension

3.5″ x 17.2″ x 32.4″ / 89mm x 437mm x 823mm (H x W x D)

Optimized for Turnkey Solutions

Enable powerful design, training, and visualization with built-in software tools including TensorFlow, Caffe, Torch, Theano, BIDMach, cuDNN, NVIDIA CUDA Toolkit and NVIDIA DIGITS.